Workflow:Web Archiving Quality Assurance (QA) Workflow

ClaireUKGWA

Revision as of 14:57, 7 February 2024

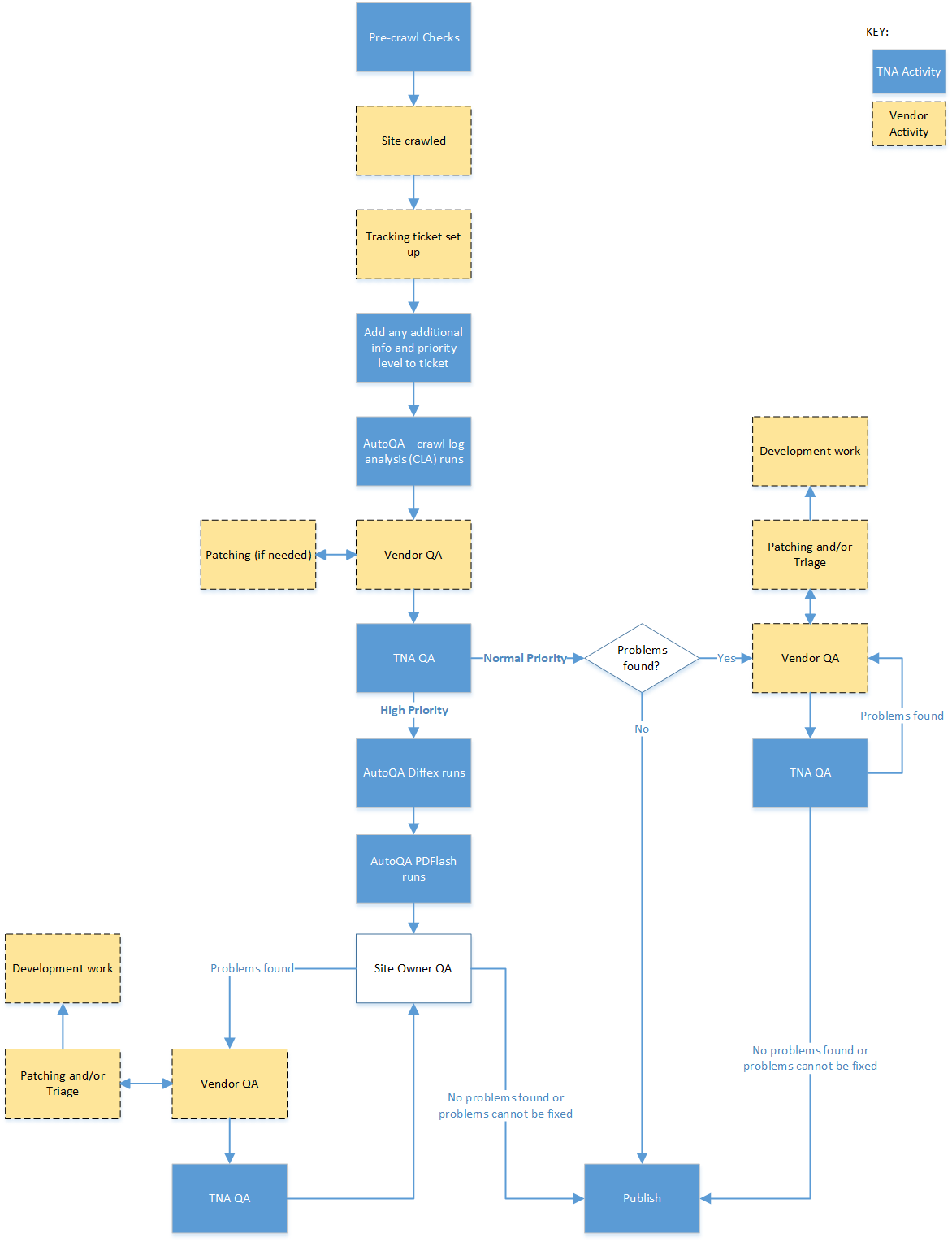

Workflow Description

Pre-crawl checks

A report is produced from WAMDB, our management database, listing all the websites due to be crawled the following month along with useful information held in the database about each site. The list is divided among the members of the web archiving team, who run through a list of checks on each website. The aim is to ensure the information we send to the crawler is as complete as possible, so the crawl is ‘right first time’. This helps to avoid additional work, and the temporal inconsistencies that can arise when content is added to the archive later (patching).

Checks include:

- Is the entry URL (seed) for the site correct?

- Is the site still being updated or is it ‘dormant’? If it has not been updated since the previous crawl, we postpone the crawl.

- Is there an XML sitemap? Has it changed since the last crawl?

- Update pagination patterns. Web crawlers are set to capture sites to a specific number of ‘hops’ from the homepage; if the number of pages in a section is bigger than the number of ‘hops’, we send those links as additional seeds for the crawler.

- Check for new pagination patterns.

- Check for content hosted at third-party locations (e.g. downloads, images).

- Has the site been redesigned since the previous crawl?

- Are there any specific checks we need to do or information we need to provide at crawl time?

We can also add specific checks for a temporary period. For example, if we need to update the contents of a particular field due to a process change.
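The pagination check in the list above can be illustrated with a minimal sketch. It assumes, as a simplification, that page n of a paginated section is n hops from the homepage and that the section's URLs follow a single pattern. The function name, the `{page}` placeholder and the example URL are hypothetical and are not taken from our tooling.

```python
from __future__ import annotations


def extra_pagination_seeds(pattern: str, last_page: int, max_hops: int) -> list[str]:
    """Return the listing-page URLs that lie beyond the crawler's hop limit.

    Assumption (simplified): page n of the section is n hops from the
    homepage, so pages numbered above max_hops would not be reached.
    """
    if last_page < 1 or max_hops < 0:
        raise ValueError("last_page must be >= 1 and max_hops must be >= 0")
    first_unreachable = max_hops + 1
    # An empty list means the whole section is within the hop limit.
    return [pattern.format(page=n) for n in range(first_unreachable, last_page + 1)]


if __name__ == "__main__":
    # Hypothetical section with 8 listing pages and a hop limit of 5.
    for seed in extra_pagination_seeds(
        "https://www.example.gov.uk/news?page={page}", last_page=8, max_hops=5
    ):
        print(seed)
```

With these hypothetical values, pages 6 to 8 would be sent as additional seeds; a section with fewer pages than the hop limit yields no extra seeds.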

Site Crawled

The crawl order is generated as an XML file and sent to our vendor. The vendor launches the crawls.
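As a rough illustration only, a crawl order of this kind could be serialised as follows. The actual schema agreed with the vendor is not described here, so the element names (`crawlOrder`, `site`, `seed`) and the input structure are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET


def build_crawl_order(sites):
    """Serialise a list of sites and their seeds to an XML string.

    `sites` is a list of dicts with "name" and "seeds" keys.
    The element and attribute names are hypothetical, not the real schema.
    """
    root = ET.Element("crawlOrder")
    for site in sites:
        site_el = ET.SubElement(root, "site", name=site["name"])
        for url in site["seeds"]:
            ET.SubElement(site_el, "seed").text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_crawl_order([
        {
            "name": "Example Department",
            "seeds": [
                "https://www.example.gov.uk/",
                "https://www.example.gov.uk/news?page=6",
            ],
        }
    ]))
```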

Tracking and Prioritisation

We currently use JIRA as our tracking system for crawls. As soon as a crawl is launched, our vendor sets up a JIRA ticket containing basic information about the crawl. All correspondence between The National Archives (TNA) and our vendor about the crawl takes place on the JIRA ticket. TNA marks up the JIRA tickets of any crawls that need to be treated as ‘High Priority’ by adding a standard label and a descriptive comment.

Common reasons for a site being considered High Priority include:

- Site content is particularly important to the programme (e.g. the main central government website: GOV.UK)

- Site is closing or will be redeveloped soon.

- Site is new and we have not crawled it before.

- Site has been redesigned since the previous crawl.

- Some functionality did not work in the previous crawl which should now be fixed following a change to the crawl configuration – this needs to be confirmed during quality assurance checks.

Our supplier will also leave a comment in the ticket if a problem is noticed during the crawl – for example if it is becoming much larger than expected or if the crawler is blocked.

Each site has a parent ticket (task) in JIRA and each individual crawl has a child ticket (sub-task). This enables us to record information which applies to all crawls at parent level and to easily move between individual crawl tickets.
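The parent and child relationship can be pictured with a small data model. This is a sketch of the structure only, not of JIRA's API; the class names, ticket keys and the `high-priority` label text are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical label text; the real standard label is not stated here.
HIGH_PRIORITY = "high-priority"


@dataclass
class CrawlTicket:
    """Child ticket (sub-task): one per individual crawl."""
    key: str
    crawl_date: str
    labels: list[str] = field(default_factory=list)
    comments: list[str] = field(default_factory=list)


@dataclass
class SiteTicket:
    """Parent ticket (task): one per site, holding notes that apply to every crawl."""
    key: str
    site_name: str
    site_notes: list[str] = field(default_factory=list)
    crawls: list[CrawlTicket] = field(default_factory=list)

    def add_crawl(self, crawl: CrawlTicket) -> CrawlTicket:
        self.crawls.append(crawl)
        return crawl

    def high_priority_crawls(self) -> list[CrawlTicket]:
        return [c for c in self.crawls if HIGH_PRIORITY in c.labels]


if __name__ == "__main__":
    site = SiteTicket("WA-100", "Example Department", ["Sitemap at /sitemap.xml"])
    site.add_crawl(CrawlTicket("WA-101", "2024-01-10"))
    site.add_crawl(CrawlTicket("WA-102", "2024-02-07", [HIGH_PRIORITY],
                               ["Site redesigned since previous crawl"]))
    print([c.key for c in site.high_priority_crawls()])
```

Keeping site-level notes on the parent means they are recorded once and remain visible from every crawl's sub-task.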

Auto-QA – Crawl Log Analysis (CLA)

After the crawl is complete, the first stage of our ‘Auto-QA’ runs. It takes every URL recorded with an error code in the crawl.log file and checks whether that URL is available on the live web. A list of any available URLs is attached to the JIRA ticket. Auto-QA is available to all under the MIT Licence: https://github.com/tna-webarchive/open-auto-qa
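The idea behind crawl log analysis can be sketched as below. This is not the open-auto-qa code; it is a simplified illustration that assumes a Heritrix-style crawl.log in which the second whitespace-separated field is the fetch status and the fourth is the URL. The function names and the definition of an error (a status of zero or below, or 400 and above) are assumptions made for the example.

```python
import urllib.error
import urllib.request


def failed_urls(log_lines):
    """Yield (status, url) for log lines whose fetch status indicates an error.

    Assumes field 2 is the status code and field 4 is the URL.
    """
    for line in log_lines:
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        try:
            status = int(fields[1])
        except ValueError:
            continue
        if status <= 0 or status >= 400:
            yield status, fields[3]


def is_live(url, timeout=10):
    """Return True if a HEAD request to the live web succeeds."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError, ValueError):
        return False


def available_on_live_web(log_lines, checker=is_live):
    """Return the failed http(s) URLs that the checker reports as available."""
    seen, available = set(), []
    for _status, url in failed_urls(log_lines):
        if url in seen or not url.startswith(("http://", "https://")):
            continue
        seen.add(url)
        if checker(url):
            available.append(url)
    return available
```

The `checker` argument is injectable so the logic can be exercised without network access; in real use the resulting list would be what gets attached to the ticket.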