Workflow:Web Archiving Quality Assurance (QA) Workflow


Author: ClaireUKGWA. Status: Production. Tools: Heritrix, Cathode, Browsertrix, JIRA, Screaming Frog. Input: live website content. Output: archived website content served from WARC fil...


Revision as of 14:45, 7 February 2024

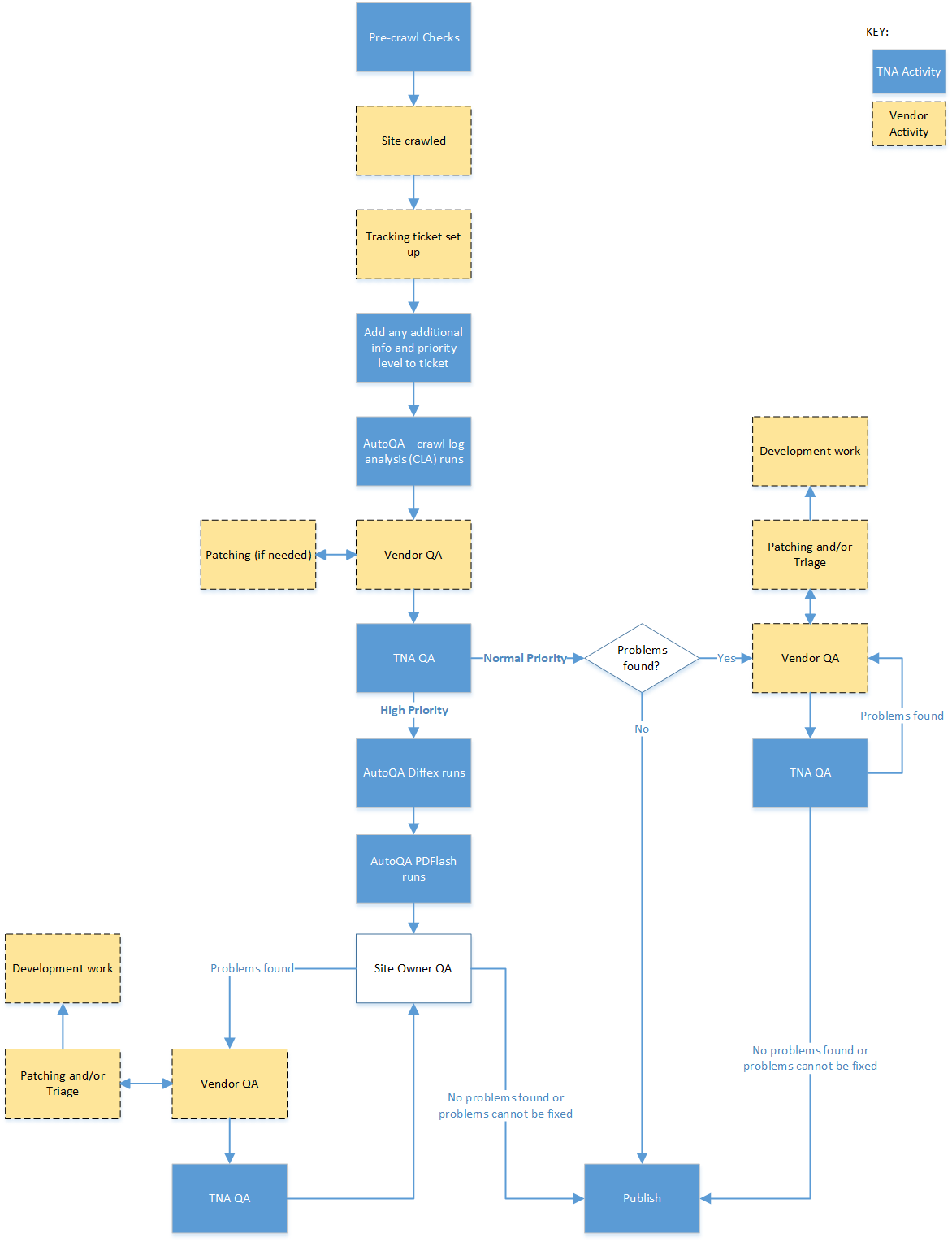

Workflow Description

Pre-crawl checks

A report is produced from WAMDB, our management database, listing all the websites due to be crawled the following month, along with the relevant information the database holds about each site.

The list is divided among the members of the web archiving team.
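As an illustration of dividing the monthly report, here is a minimal sketch that allocates sites to team members in turn. It assumes the WAMDB report has been exported as a list of records; the `Site` fields, the `divide_sites` helper and the round-robin allocation are all hypothetical and not necessarily how the team splits its list.

```python
from dataclasses import dataclass


@dataclass
class Site:
    # Hypothetical fields; the real WAMDB report holds more information per site.
    name: str
    seed: str


def divide_sites(sites: list[Site], team: list[str]) -> dict[str, list[Site]]:
    """Allocate sites to team members round-robin so workloads stay roughly even."""
    if not team:
        raise ValueError("team must not be empty")
    allocation: dict[str, list[Site]] = {member: [] for member in team}
    for index, site in enumerate(sites):
        # Cycle through team members in a fixed order.
        allocation[team[index % len(team)]].append(site)
    return allocation


if __name__ == "__main__":
    report = [Site(f"site-{i}", f"https://example.org/{i}") for i in range(5)]
    for member, assigned in divide_sites(report, ["member-a", "member-b"]).items():
        print(member, [s.name for s in assigned])
```

Round-robin only balances the number of sites; weighting by expected effort (for example, site size) would be a natural refinement.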

The team runs through a list of checks on each website. The aim is to make the information we send to the crawler as complete as possible, so that the crawl is ‘right first time’. This avoids additional work and the temporal inconsistencies that can arise if content is added to the archive later (patching).

Checks include:

- Is the entry URL (seed) for the site correct?

- Is the site still being updated, or is it ‘dormant’? If it hasn’t been updated since the previous crawl, we’ll postpone the crawl.

- Is there an XML sitemap? Has it changed since the last crawl?

- Update pagination patterns: web crawlers are set to capture sites to a specific number of ‘hops’ from the homepage, so if a paginated section runs to more pages than the crawler can reach within that number of ‘hops’, we send the links to the remaining pages as additional seeds for the crawler.

- Check for new pagination patterns.

- Check for content hosted in third-party locations (e.g. downloads, images).

- Has the site been redesigned since the previous crawl?

- Are there any specific checks we need to do or information we need to provide at crawl time?
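The dormancy and sitemap checks in the list above could be partly supported by reading `<lastmod>` dates from a site's XML sitemap. This is a minimal sketch, assuming the sitemap follows the sitemaps.org protocol and that the previous crawl date is known (for example from WAMDB); the `urls_changed_since` helper is hypothetical. Many sitemaps omit or misreport `<lastmod>`, so an empty result suggests, but does not prove, that a site is dormant.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_changed_since(sitemap_xml: str, last_crawl: date) -> list[str]:
    """Return <loc> values whose <lastmod> date falls after the last crawl."""
    root = ET.fromstring(sitemap_xml)
    changed = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url.findtext("sm:lastmod", default="", namespaces=SITEMAP_NS).strip()
        if not lastmod:
            continue  # No date recorded, so we cannot tell whether it changed.
        try:
            # Keep only the YYYY-MM-DD part of a W3C datetime.
            modified = date.fromisoformat(lastmod[:10])
        except ValueError:
            continue  # Coarser or malformed date: leave for a manual check.
        if modified > last_crawl:
            changed.append(loc)
    return changed


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.org/</loc><lastmod>2024-01-20</lastmod></url>"
        "<url><loc>https://example.org/about</loc><lastmod>2023-06-01</lastmod></url>"
        "</urlset>"
    )
    changed = urls_changed_since(sample, date(2024, 1, 1))
    print(changed or "No changes since last crawl: possibly dormant")
```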
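The pagination check in the list above comes down to a small calculation. This sketch assumes a simple hop model: page 1 of a paginated section is `base_hops` hops from the homepage and each further page is reached through one more "next page" link, so page `n` sits at `base_hops + n - 1` hops. Pages deeper than `max_hops` must be supplied as extra seeds. The `?page=` URL pattern, parameter names and helper are hypothetical; real sites often link directly to several page numbers, and hop accounting depends on how the crawler is configured.

```python
def extra_pagination_seeds(
    pattern: str, total_pages: int, base_hops: int, max_hops: int
) -> list[str]:
    """Return URLs of paginated pages that lie beyond the crawler's hop limit.

    Assumes page 1 is `base_hops` hops from the homepage and that each later
    page adds exactly one hop (reached via a "next page" link).
    """
    # Deepest page number the crawler can still reach by itself.
    last_reachable = max_hops - base_hops + 1
    # If even page 1 is out of reach, every page needs a seed.
    first_unreached = max(last_reachable + 1, 1)
    return [pattern.format(page=n) for n in range(first_unreached, total_pages + 1)]


if __name__ == "__main__":
    seeds = extra_pagination_seeds("https://example.org/news?page={page}", 12, 1, 5)
    print(seeds)
```

With a hop limit of 5 and page 1 one hop from the homepage, pages 1 to 5 are reachable and pages 6 to 12 are returned as seeds.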
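The check for third-party content in the list above could start from the hostnames a page links to. This is a minimal sketch using Python's standard `html.parser` and `urllib.parse`; the `third_party_hosts` helper is hypothetical. It treats any hostname other than the page's own, including other subdomains of the same site, as external, which a real check would probably need to refine.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect href and src attribute values from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def third_party_hosts(html: str, page_url: str) -> set[str]:
    """Return hostnames referenced by the page that differ from its own host."""
    own_host = urlsplit(page_url).hostname
    parser = LinkCollector()
    parser.feed(html)
    hosts: set[str] = set()
    for link in parser.links:
        parts = urlsplit(urljoin(page_url, link))  # Resolve relative links first.
        # Ignore mailto:, javascript: and similar non-web links.
        if parts.scheme in ("http", "https") and parts.hostname and parts.hostname != own_host:
            hosts.add(parts.hostname)
    return hosts
```

The resulting hostnames could then be reviewed and, where appropriate, added to the crawl scope.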

We can also add specific checks for a temporary period, for example if we need to update the contents of a particular field following a process change.
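One way to manage temporary checks is to store the checklist as data with an optional expiry date. This is a minimal sketch under that assumption; the `Check` structure, the `active_checks` helper and the expiry mechanism are hypothetical illustrations rather than how the team actually records its checklist.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Check:
    description: str
    # None means a permanent check; otherwise the last date the check applies.
    expires: Optional[date] = None


def active_checks(checks: list[Check], on: date) -> list[str]:
    """Return descriptions of the checks that apply on the given date."""
    return [c.description for c in checks if c.expires is None or on <= c.expires]


if __name__ == "__main__":
    checklist = [
        Check("Is the entry URL (seed) for the site correct?"),
        Check("Update the contents of the changed field", expires=date(2024, 3, 31)),
    ]
    for item in active_checks(checklist, date(2024, 2, 7)):
        print("-", item)
```

Keeping the expiry with the check means temporary items drop off the list automatically once the process change is complete.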