Workflow:Browsertrix-crawler Workflow

Latest revision as of 17:02, 9 December 2021

Workflow Description

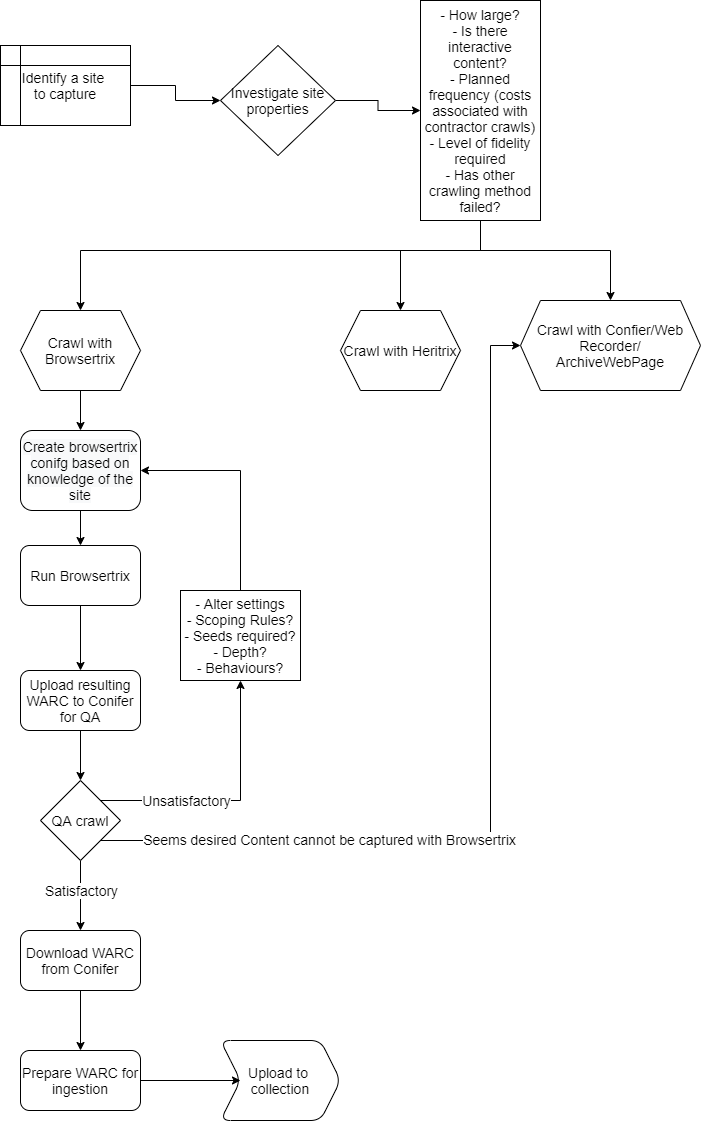

The workflow involves the decision to capture a website with Browsertrix-crawler. It shows the iterative process of crawling a page with Browsertrix, QAing the results in Conifer and recrawling with adjusted settings.

Purpose, Context and Content

The purpose of this workflow is to determine whether a site is suitable for capture with Browsertrix Crawler and, if so, to run a Browsertrix crawl. The crawl is then subject to Quality Assurance. If the crawl is found to be unsatisfactory, Browsertrix settings are adjusted and the crawl is run again, with this process potentially being repeated several times until a satisfactory crawl is completed. If no satisfactory crawl can be made in this way, the site will be captured with Conifer.

The steps are as follows:

1. A site is identified for capture.

2. The site is assessed to determine which capture method is suitable. At this point we look at:

- How large is the site?

- Does the site contain interactive content?

- What is the planned capture frequency? (If the proposed capture is very frequent, we may be more likely to use an in-house tool like Browsertrix to reduce costs.)

- What level of fidelity is required?

- Have previous crawls of the site been attempted and, if so, what was the outcome?

3. An initial decision is made about which capture technology to use.

4. If Browsertrix is selected, an initial config will be generated based on our knowledge of the site (in our case this would be generated from our in-house database).

5. An initial Browsertrix crawl is then run, using default settings.

6. The resulting WARC is uploaded to Conifer for Quality Assurance.

7. If the level of quality is found to be unsatisfactory, Browsertrix settings will be tweaked, in preparation for running another crawl. At this point we will review settings for:

- Scoping rules

- Seeds

- Depth

- Behaviours

8. A new Browsertrix crawl will then be run with the new configuration.

9. This process is repeated until the crawl is found to be satisfactory in QA. If we are unable to complete a satisfactory crawl, at this point we will look at capturing the site with Conifer.

10. If a satisfactory Browsertrix crawl has been completed, we download the WARC from Conifer.

11. The WARC is then prepared for ingest into our collection. For us this involves renaming the file and compressing it.

12. The WARC is then uploaded to our collection and published.
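The crawl, QA and recrawl loop in steps 5 to 9 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not our production code: `CrawlSettings`, `build_crawl_command` and `crawl_until_satisfactory` are hypothetical names, QA is represented by a caller-supplied function (in practice it is a manual review in Conifer), and the command-line flags (`--url`, `--scopeType`, `--depth`, `--behaviors`, `--collection`) reflect our reading of the Browsertrix Crawler README and should be checked against the version you have installed.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class CrawlSettings:
    """The settings reviewed in step 7 (field names are illustrative)."""
    seeds: List[str]
    scope_type: str = "prefix"   # scoping rule
    depth: int = -1              # assumed convention: -1 means no depth limit
    behaviors: str = "autoplay,autofetch,siteSpecific"
    collection: str = "example-crawl"


def build_crawl_command(settings: CrawlSettings,
                        crawls_dir: str = "/tmp/crawls") -> List[str]:
    """Build a docker command line for one crawl (flags are assumptions)."""
    cmd = [
        "docker", "run", "-v", f"{crawls_dir}:/crawls/",
        "webrecorder/browsertrix-crawler", "crawl",
        "--collection", settings.collection,
        "--scopeType", settings.scope_type,
        "--behaviors", settings.behaviors,
    ]
    if settings.depth >= 0:
        cmd += ["--depth", str(settings.depth)]
    for seed in settings.seeds:
        cmd += ["--url", seed]
    return cmd


def crawl_until_satisfactory(
    settings: CrawlSettings,
    run_crawl: Callable[[List[str]], str],
    qa_passes: Callable[[str], bool],
    adjust: Callable[[CrawlSettings, int], CrawlSettings],
    max_attempts: int = 5,
) -> Optional[str]:
    """Repeat crawl and QA, adjusting settings after each failed attempt.

    Returns the path of the first WARC that passes QA, or None if no
    attempt succeeds, in which case the site falls back to Conifer capture.
    """
    for attempt in range(1, max_attempts + 1):
        warc_path = run_crawl(build_crawl_command(settings))
        if qa_passes(warc_path):
            return warc_path
        settings = adjust(settings, attempt)
    return None
```

Here `run_crawl` would typically pass the command to `subprocess.run` and return the path of the resulting WARC, while `adjust` encodes whatever change to scoping rules, seeds, depth or behaviours the QA review suggests. The `max_attempts` cap is an assumption of the sketch; the workflow itself does not fix a number of retries.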

Evaluation/Review

This workflow is currently used in production at the UK Government Web Archive. We have found it to be successful with a variety of content types. However, we are still refining our process and experimenting with different Browsertrix configurations.

Further Information

Information on getting started with Browsertrix Crawler can be found on the project's GitHub page: https://github.com/webrecorder/browsertrix-crawler