Workflow:Workflow for ingesting digitized books into a digital archive


Status: Test phase

Tools: 7-Zip, DROID (Digital Record Object Identification), FITS (File Information Tool Set), Fedora Commons, docuteam feeder, cURL, Saxon, ClamAV

Input: Digitized content

Output: Packages of digitized content ready for ingest

Organisation: Universitätsbibliothek Bern (http://www.unibe.ch/university/services/university_library/ub/index_eng.html)

Name: Ingest of digitized books

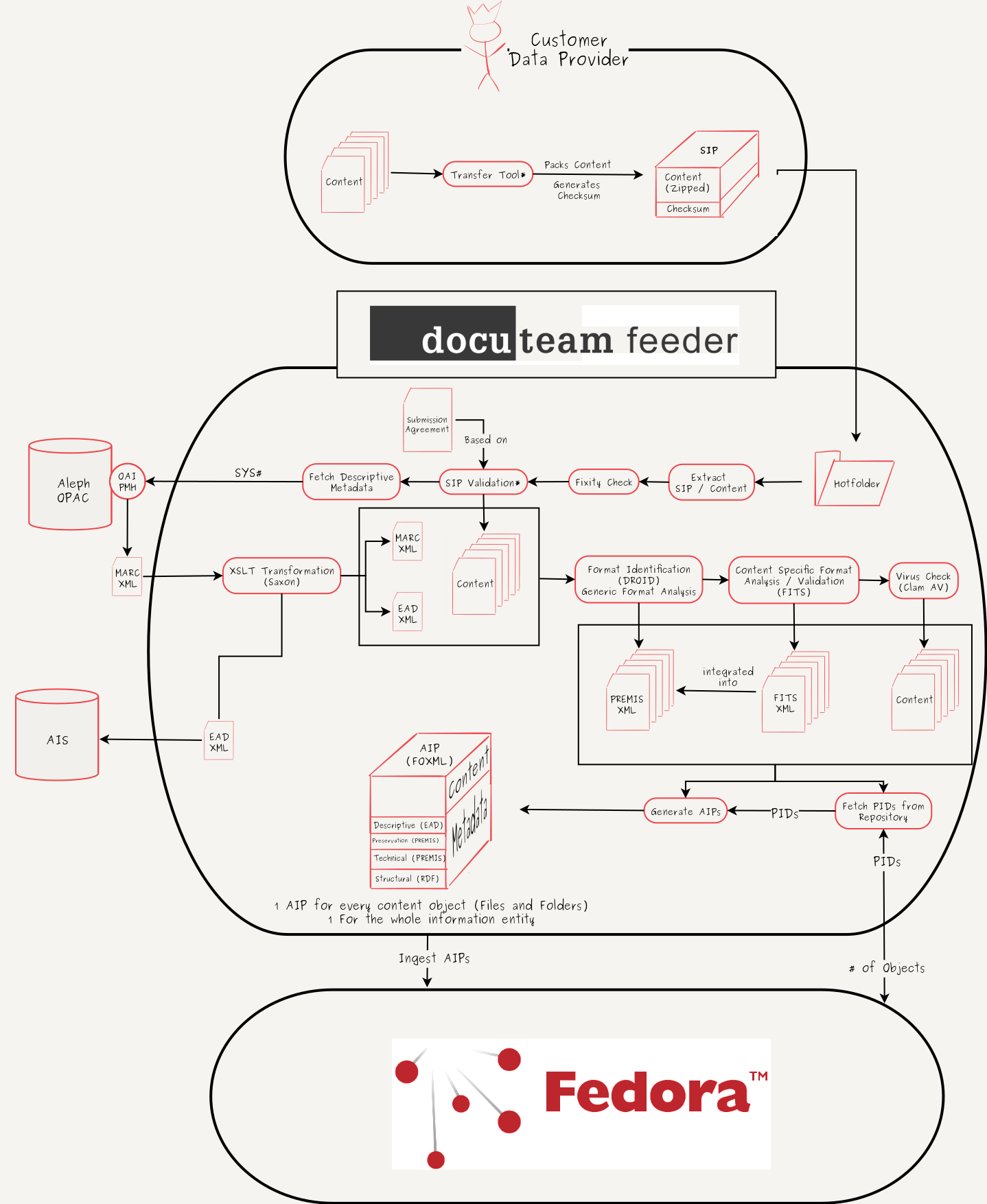


Latest revision as of 11:15, 29 April 2021

Workflow Description

- The data provider submits their content to the transfer tool (currently in development).

- The transfer tool creates a zip-container with the content and calculates a checksum of the container.

- The zip-container and the checksum are bundled together, either in another zip-container or in a plain folder, to form the SIP (Submission Information Package).

- The transfer tool moves the SIP to a registered, provider-specific hotfolder that is connected to the ingest server.

- As soon as the complete SIP has been transferred to the ingest server, a trigger is raised and the ingest workflow starts.

- The SIP gets unpacked.

- The zip-container holding the content is validated against the provided checksum. If this fixity check fails, the data provider is asked to resubmit their data.

- The content and its structure are validated against the submission agreement signed with the data provider (this step is currently in development).

- Using a unique ID encoded in the content filename, descriptive metadata is fetched from the library's OPAC via an OAI-PMH interface.

- The OPAC returns a MARC.XML-file.

- The MARC.XML-file is mapped to an EAD.XML-file by an XSLT transformation.

- The EAD.XML is exported to a designated folder for pickup by the archival information system.

- Every content file is analysed by DROID for format identification, and basic technical metadata (e.g. file size) is extracted.

- The output of this analysis is written to a PREMIS.XML-file (one PREMIS.XML per content object).

- Every content file is validated and analysed by FITS, and content-specific technical metadata is extracted.

- The output of this analysis (FITS.XML) is integrated into the existing PREMIS.XML-files.

- Each content file is scanned for viruses and malware by ClamAV.

- For each content object and for the whole information entity (the book), a PID (persistent identifier) is fetched from the repository.

- For each content object and for the whole information entity, an AIP (Archival Information Package) is generated. This process includes generating the RDF triples that record the relationships between the objects.

- The AIPs are ingested into the repository.

- The data provider is notified that the ingest finished successfully.
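The packaging and fixity steps above (zip the content, checksum the container, bundle both into the SIP, then recompute the checksum on the ingest side) could look roughly like the following Python sketch. The workflow does not name the checksum algorithm or the file names inside the SIP, so SHA-256, `content.zip` and `content.zip.sha256` are assumptions, and the plain-folder variant of the bundle is used.

```python
import hashlib
import tempfile
import zipfile
from pathlib import Path

# Hypothetical names: the workflow does not specify the checksum algorithm
# or the file names inside the SIP; SHA-256 and these names are assumptions.
CONTAINER_NAME = "content.zip"
CHECKSUM_NAME = "content.zip.sha256"


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_sip(content_dir: Path, sip_dir: Path) -> Path:
    """Zip the content, checksum the container and bundle both in a plain SIP folder."""
    sip_dir.mkdir(parents=True, exist_ok=True)
    container = sip_dir / CONTAINER_NAME
    with zipfile.ZipFile(container, "w", zipfile.ZIP_DEFLATED) as zf:
        for file in sorted(content_dir.rglob("*")):
            if file.is_file():
                # Store paths relative to the content folder
                zf.write(file, file.relative_to(content_dir).as_posix())
    (sip_dir / CHECKSUM_NAME).write_text(sha256_of(container) + "\n", encoding="utf-8")
    return sip_dir


def fixity_ok(sip_dir: Path) -> bool:
    """Ingest side: recompute the container checksum and compare it with the submitted one."""
    expected = (sip_dir / CHECKSUM_NAME).read_text(encoding="utf-8").strip()
    return sha256_of(sip_dir / CONTAINER_NAME) == expected


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        content = Path(tmp) / "book"
        content.mkdir()
        (content / "page_0001.tif").write_bytes(b"placeholder image data")
        sip = build_sip(content, Path(tmp) / "sip")
        print(fixity_ok(sip))  # True: the container has not changed since packaging
```

Checksumming the container rather than each file keeps the SIP to two items; per-file checksums would instead come later from the technical metadata extraction.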
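The metadata lookup in the steps above can be sketched as building an OAI-PMH `GetRecord` request from the ID encoded in the filename. The `verb`, `identifier` and `metadataPrefix` parameters are standard OAI-PMH; the endpoint, the identifier prefix, the `marcxml` metadata prefix and the filename convention (`<record id>_<sequence>.<extension>`) are hypothetical placeholders.

```python
import re
from urllib.parse import urlencode

# Hypothetical values: the real OPAC endpoint, identifier prefix, metadata
# prefix and filename convention are not given in the workflow description.
OAI_BASE_URL = "https://opac.example.org/oai"
OAI_ID_PREFIX = "oai:opac.example.org:"
METADATA_PREFIX = "marcxml"

# Assumed filename convention: "<record id>_<sequence number>.<extension>"
FILENAME_PATTERN = re.compile(r"^(?P<record_id>[0-9A-Za-z]+)_\d+\.\w+$")


def record_id_from_filename(filename: str) -> str:
    """Extract the catalogue record ID that is encoded in a content filename."""
    match = FILENAME_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"filename does not follow the expected convention: {filename}")
    return match.group("record_id")


def get_record_url(record_id: str) -> str:
    """Build an OAI-PMH GetRecord request URL for one catalogue record."""
    params = {
        "verb": "GetRecord",
        "identifier": OAI_ID_PREFIX + record_id,
        "metadataPrefix": METADATA_PREFIX,
    }
    return f"{OAI_BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(get_record_url(record_id_from_filename("991234567_0001.tif")))
```

The resulting URL could then be fetched with cURL, as in the tool list, e.g. `curl -o record.xml "<url>"`.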
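The relationship triples generated during AIP creation could, for example, link the book to its content objects in both directions. The sketch below assumes Fedora 3 style `info:fedora/` PIDs and the Dublin Core terms `hasPart`/`isPartOf` predicates; the workflow only says that relationship triples are generated, not which vocabulary is used, and the PIDs shown are hypothetical.

```python
from __future__ import annotations

# Assumptions: PIDs use the Fedora 3 "info:fedora/" URI form and relationships
# use the Dublin Core terms hasPart/isPartOf predicates.
DCTERMS = "http://purl.org/dc/terms/"


def relationship_triples(book_uri: str, part_uris: list[str]) -> list[str]:
    """Return N-Triples lines linking the book (information entity) to its content objects."""
    triples: list[str] = []
    for part_uri in part_uris:
        triples.append(f"<{book_uri}> <{DCTERMS}hasPart> <{part_uri}> .")
        triples.append(f"<{part_uri}> <{DCTERMS}isPartOf> <{book_uri}> .")
    return triples


def to_ntriples(triples: list[str]) -> str:
    """Serialize triple lines as an N-Triples document (one triple per line)."""
    return "\n".join(triples) + "\n"


if __name__ == "__main__":
    # Hypothetical PIDs fetched from the repository
    lines = relationship_triples("info:fedora/demo:book1", ["info:fedora/demo:page1"])
    print(to_ntriples(lines), end="")
```

Writing both directions lets either the book AIP or a page AIP be resolved to its counterpart without a reverse index.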

The tools and their function in the workflow:

- 7-Zip - Pack and unpack content / SIPs

- docuteam feeder - Workflow and ingest framework

- cURL - Fetch MARC.XML via HTTP

- Saxon - MARC.XML to EAD.XML mapping

- DROID - Format identification and generation of basic technical metadata

- FITS (File Information Tool Set) - Generation of specific, content-aware technical metadata

- ClamAV - Virus check

- Fedora Commons - Digital repository
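The virus check could be wrapped as follows, assuming the ingest calls the `clamscan` command-line scanner (the workflow does not say whether `clamscan` or the `clamdscan` daemon client is used). `clamscan` exits with 0 when no virus is found, 1 when a virus is found and 2 on errors; treating every non-zero result as a stop condition is an assumption of this sketch.

```python
import subprocess
from enum import Enum


class ScanResult(Enum):
    CLEAN = "clean"
    INFECTED = "infected"
    ERROR = "error"


def interpret_exit_code(code: int) -> ScanResult:
    """Map clamscan exit codes: 0 = no virus found, 1 = virus(es) found, 2 = error."""
    if code == 0:
        return ScanResult.CLEAN
    if code == 1:
        return ScanResult.INFECTED
    # 2 and any unexpected code are treated as errors so the ingest does not proceed silently
    return ScanResult.ERROR


def scan_file(path: str) -> ScanResult:
    """Scan one content file with the clamscan command-line scanner."""
    try:
        completed = subprocess.run(
            ["clamscan", "--no-summary", path],
            capture_output=True,
            text=True,
            check=False,
        )
    except FileNotFoundError:
        # clamscan is not installed or not on PATH
        return ScanResult.ERROR
    return interpret_exit_code(completed.returncode)
```

Only `ScanResult.CLEAN` would let a file continue to PID assignment and AIP generation in this sketch.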

Purpose, Context and Content

The University Library of Bern digitizes historical books, maps and journals that are part of the "Bernensia" collection (media about the canton and city of Bern, or by authors from there). The digital objects resulting from this digitization process are unique and therefore of great value to the library. To preserve them for the long term, these objects have to be ingested into the library's digital archive. The main goals of this workflow are to give the data provider (the digitization team) an easy-to-use interface, to provide a simple gateway to the digital archive, and to collect as much of the available metadata as possible automatically.

Evaluation/Review

A subset of the workflow has been set up and tested as a proof of concept on a prototype system. It is to be installed and extended in a production environment shortly.

Further Information

ChrisReinhart (talk) 14:00, 7 April 2017 (UTC)